Edition Nov 16th-17th, 2018

Vertical Innovation Hackathon

F-O-O-D Team

Winner of the ACS Data Systems AG challenge

Facial recognition desktop tool analyzing webcam data and web photos

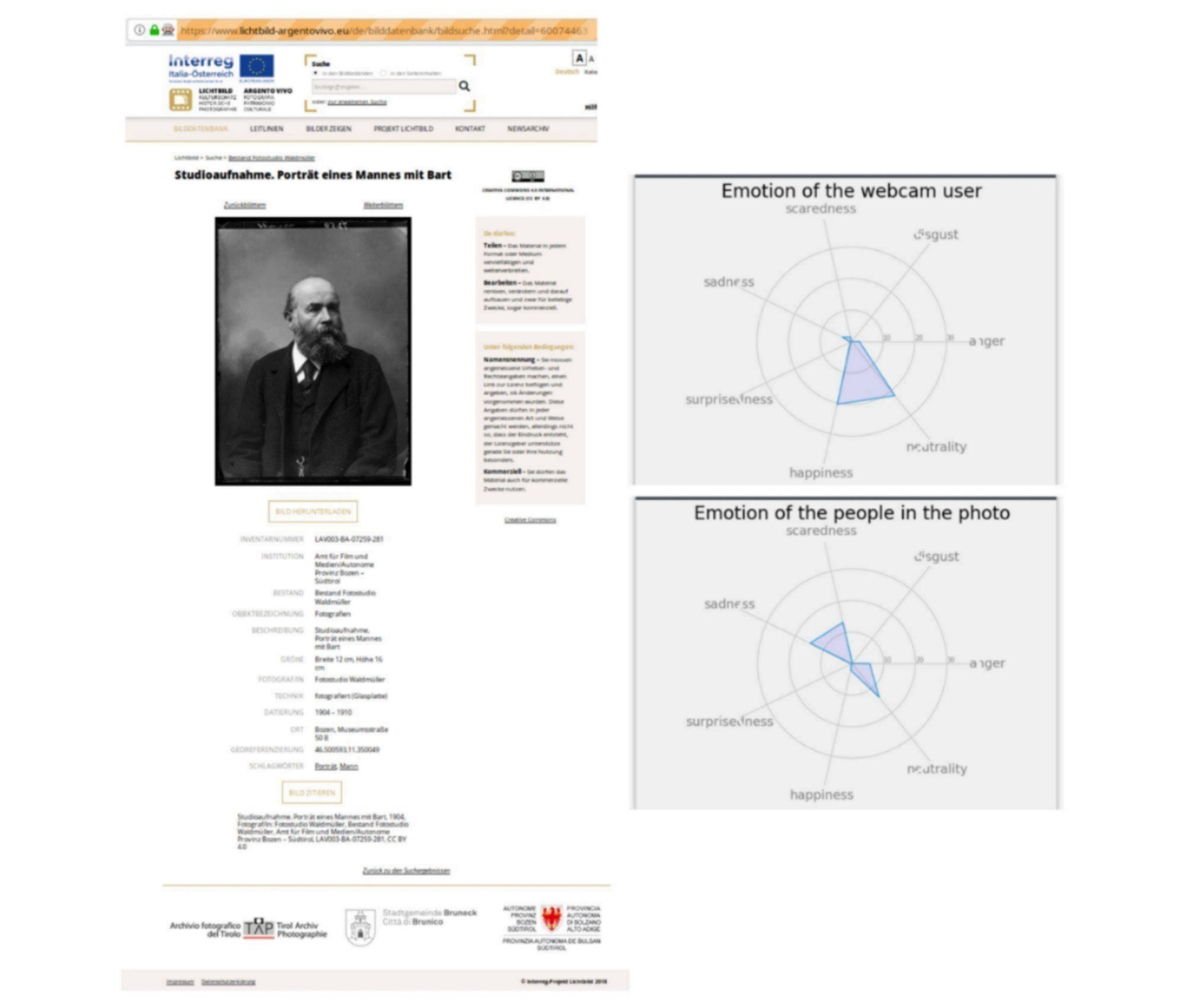

While users look at pictures on the Internet, both their own facial expressions and those of the people in the photos are analyzed to detect emotions.

Based on emotion recognition, we can analyze which emotions are triggered when photo content from the Internet is presented: anger, disgust, fear, happiness, sadness, surprise, and neutrality.

The same algorithm is applied to the photo content itself whenever faces appear in a picture on the website currently open in the web browser (so far, the focus is on the South Tyrolean Lichtbild-ArgentoVivo photo archive).

We compare both results (the emotion of the user and the emotion of the people in the photo) using radar charts to see whether the detected emotions are consistent.
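The comparison can also be expressed as a number. The following is a minimal sketch, not the project's actual code: it assumes each analysis yields one score per emotion, computes the polygon vertices a radar chart would draw, and uses cosine similarity as one possible consistency measure. The label order, the function names `radar_vertices` and `cosine_similarity`, and the example scores are all hypothetical.

```python
import math

# Emotion labels follow the project description; their order is an assumption.
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def radar_vertices(scores):
    """Return the (x, y) polygon vertices of a radar chart for the given scores."""
    n = len(scores)
    return [
        (s * math.cos(2 * math.pi * i / n), s * math.sin(2 * math.pi * i / n))
        for i, s in enumerate(scores)
    ]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length score vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("score vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical example scores, not measured data.
user_scores = [0.05, 0.02, 0.03, 0.70, 0.05, 0.10, 0.05]
photo_scores = [0.02, 0.01, 0.02, 0.80, 0.05, 0.05, 0.05]

similarity = cosine_similarity(user_scores, photo_scores)
print(f"consistency (cosine similarity): {similarity:.3f}")
```

A value close to 1 means the two radar shapes point in the same direction, for example when both the viewer and the people in the photo are mostly happy. A value close to 0 means the detected emotions barely overlap.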

Story of the project

At the beginning, each of us voted individually for three favorite projects to decide which one fit us best. We concluded that we could propose a solution for two challenges: one offered by ACS Data Systems AG and another from Axiell.

To validate our approach, we used photo galleries from the project “Lichtbild. Argento Vivo” (CC-BY license), especially portrait photographs. In this way we help preserve historical heritage and promote the idea of free and democratic access to historical information about the Autonomous Province of Bolzano - South Tyrol.

Our second approach was to analyze and understand users' behavior and traits when they interact with photo galleries. For this reason we implemented a real-time emotion recognition tool. Using non-intrusive technologies, we try to understand the person interacting with the multimedia content while browsing the web.

Another use case could be to provide information for sociological studies: the results of the emotion analysis could be related to certain regions or points in time (the photos from the Lichtbild archive are tagged with this information) to explore possible patterns.

Technology:

We implemented a Python program that reads data from the webcam and provides real-time information about the user's current emotion, building on open-source projects such as TensorFlow, Keras and OpenCV. Simultaneously, the same process is applied to the webpage currently shown to the user, specifically when the web browser displays pictures containing people's faces. The outcome is represented as radar charts and stored in an SQLite database. In the final dashboard, which shows the database entries, each data set has its own color to help visually correlate and contrast the detected emotions.
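The storage step could look roughly like the sketch below, which uses only Python's built-in `sqlite3` module. The table name `emotion_samples`, its columns, and the helper functions are assumptions made for illustration; the project's real schema is not described here.

```python
import sqlite3
from datetime import datetime, timezone


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the hypothetical table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS emotion_samples (
            id          INTEGER PRIMARY KEY AUTOINCREMENT,
            recorded_at TEXT NOT NULL,
            source      TEXT NOT NULL CHECK (source IN ('user', 'photo')),
            page_url    TEXT NOT NULL,
            emotion     TEXT NOT NULL,
            score       REAL NOT NULL
        )
        """
    )
    return conn


def store_scores(conn, source, page_url, scores):
    """Insert one row per emotion for a single analysis result."""
    now = datetime.now(timezone.utc).isoformat()
    rows = [
        (now, source, page_url, emotion, float(score))
        for emotion, score in scores.items()
    ]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO emotion_samples "
            "(recorded_at, source, page_url, emotion, score) "
            "VALUES (?, ?, ?, ?, ?)",
            rows,
        )


def dominant_emotion(conn, source, page_url):
    """Return the emotion with the highest mean score, or None if nothing is stored."""
    row = conn.execute(
        "SELECT emotion, AVG(score) AS mean_score "
        "FROM emotion_samples "
        "WHERE source = ? AND page_url = ? "
        "GROUP BY emotion ORDER BY mean_score DESC LIMIT 1",
        (source, page_url),
    ).fetchone()
    return row[0] if row else None
```

Keeping a `source` column lets a dashboard query the webcam results and the photo results for the same page side by side, for example `dominant_emotion(conn, "user", url)` next to `dominant_emotion(conn, "photo", url)`.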